Like GemFire 7 , GemFireXD now includes Pulse. GemFire XD Pulse

is a Web Application that provides a graphical dashboard for monitoring

vital, real-time health and performance of GemFire XD clusters,

members, and tables.

Use Pulse

to examine total memory, CPU, and disk space used by members, uptime

statistics, client connections, WAN connections, query statistics, and

critical notifications. Pulse

communicates with a GemFire XD JMX manager to provide a complete view

of your GemFire XD deployment. You can drill down from a high-level

cluster view to examine individual members and tables within a member.

By default, GemFire XD Pulse runs in a Tomcat server container that is embedded in a GemFire XD JMX manager node which you can enable by starting your locator as follows.

sqlf locator start -peer-discovery-address=localhost -peer-discovery-port=41111 -jmx-manager-start=true -jmx-manager-http-port=7075 -conserve-sockets=false -client-bind-address=localhost -client-port=1527 -dir=locator -sync=false

Then you just need to start a browser, point to http://locator-ip:7075/pulse and login as "admin/admin".

Screen Shot Below.

Search This Blog

Friday 20 December 2013

Thursday 19 December 2013

User Defined Types (UTS's) in Pivotal GemFireXD

The CREATE TYPE statement creates a user-defined type (UDT). A UDT is

a serializable Java class whose instances are stored in columns. The class must

implement the

java.io.Serializable interface. In this example below we create a TYPE with just one string property to highlight how it's done and then how we can create a FUNCTION to allow us to insert the TYPE using sqlf command line.

1. Create 2 Java classes as shown below. One is our UDT class while the other is used to create an instance of it and expose it as a FUNCTION

SqlfireString.java

SqlfireStringFactory.java

2. Create TYPE as shown below

3. Create FUNCTION to enable us to use TYPE

4. Create TABLE using TYPE and insert data

Note: When using JDBC you would use a PreparedStatement and setObject method to add the TYPE column as shown below.

More Information

http://gemfirexd-05.run.pivotal.io/index.jsp?topic=/com.pivotal.gemfirexd.0.5/reference/language_ref/rrefsqljcreatetype.html

1. Create 2 Java classes as shown below. One is our UDT class while the other is used to create an instance of it and expose it as a FUNCTION

SqlfireString.java

package pas.au.apples.sqlfire.types;

public class SqlfireString implements java.io.Serializable

{

public String value;

public SqlfireString()

{

}

public SqlfireString(String value)

{

this.value = value;

}

@Override

public String toString()

{

return value;

}

}

SqlfireStringFactory.java

package pas.au.apples.sqlfire.types;

public class SqlfireStringFactory

{

public static SqlfireString newInstance (String s)

{

return new SqlfireString(s);

}

}

2. Create TYPE as shown below

CREATE TYPE LARGE_STRING EXTERNAL NAME 'pas.au.apples.sqlfire.types.SqlfireString' LANGUAGE JAVA;

3. Create FUNCTION to enable us to use TYPE

CREATE FUNCTION udt_large_string(VARCHAR(32672)) RETURNS LARGE_STRING LANGUAGE JAVA PARAMETER STYLE JAVA NO SQL EXTERNAL NAME 'pas.au.apples.sqlfire.types.SqlfireStringFactory.newInstance';

4. Create TABLE using TYPE and insert data

create table udt_table (id int, text LARGE_STRING);

insert into udt_table values (1, udt_large_string('pas'));

Note: When using JDBC you would use a PreparedStatement and setObject method to add the TYPE column as shown below.

SqlfireString udtLargeString = new SqlfireString("pas");

pstmt.setObject(1, udtLargeString);

More Information

http://gemfirexd-05.run.pivotal.io/index.jsp?topic=/com.pivotal.gemfirexd.0.5/reference/language_ref/rrefsqljcreatetype.html

Saturday 7 December 2013

Spring HikariCP with Pivotal GemFireXD

Previously I blogged about using the HikariCP with Pivotal GemFireXD as per the post below.

http://theblasfrompas.blogspot.com.au/2013/12/hikaricp-connection-pool-with-pivotal.html

In this example I show how you would use this Connection Pool with Spring.

1. Ensure your using version 1.2.1 of HikariCP as it provides the setDataSourceProperties() setter to HikariConfig to allow easier configuration though Spring. Example below is for Maven.

2. Create a spring configuration XML file as shown below.

3. Create a datasource.properties as shown below.

portNumber=1527

serverName=192.168.1.6

user=app

password=app

4. Finally access with code as follows

ApplicationContextHolder.java

Accessing as follows

http://theblasfrompas.blogspot.com.au/2013/12/hikaricp-connection-pool-with-pivotal.html

In this example I show how you would use this Connection Pool with Spring.

1. Ensure your using version 1.2.1 of HikariCP as it provides the setDataSourceProperties() setter to HikariConfig to allow easier configuration though Spring. Example below is for Maven.

<dependencies>

<dependency>

<groupId>com.zaxxer</groupId>

<artifactId>HikariCP</artifactId>

<version>1.2.1</version>

<scope>compile</scope>

</dependency>

2. Create a spring configuration XML file as shown below.

<?xml version="1.0" encoding="UTF-8"?>

<beans xmlns="http://www.springframework.org/schema/beans"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xmlns:util="http://www.springframework.org/schema/util"

xsi:schemaLocation="http://www.springframework.org/schema/beans http://www.springframework.org/schema/beans/spring-beans.xsd

http://www.springframework.org/schema/util http://www.springframework.org/schema/util/spring-util-3.1.xsd">

<bean id="hikariConfig" class="com.zaxxer.hikari.HikariConfig">

<property name="maximumPoolSize" value="10" />

<property name="minimumPoolSize" value="2" />

<property name="dataSourceClassName"

value="com.vmware.sqlfire.internal.jdbc.ClientDataSource" />

<property name="dataSourceProperties" ref="props" />

<property name="poolName" value="springHikariCP" />

</bean>

<bean id="dataSource" class="com.zaxxer.hikari.HikariDataSource">

<constructor-arg ref="hikariConfig" />

</bean>

<util:properties id="props" location="classpath:datasource.properties"/>

</beans>

3. Create a datasource.properties as shown below.

portNumber=1527

serverName=192.168.1.6

user=app

password=app

4. Finally access with code as follows

ApplicationContextHolder.java

package pivotal.au.gemfirexd.demos.connectionpool.spring;

import org.springframework.context.ApplicationContext;

import org.springframework.context.support.ClassPathXmlApplicationContext;

public class ApplicationContextHolder

{

private static ClassPathXmlApplicationContext applicationContext;

public static ClassPathXmlApplicationContext getInstance()

{

if(applicationContext == null)

{

applicationContext = new ClassPathXmlApplicationContext("classpath:application-context.xml");

}

return applicationContext;

}

public static ApplicationContext getInstance(String contextLocation)

{

if(applicationContext == null)

{

applicationContext = new ClassPathXmlApplicationContext(contextLocation);

}

return applicationContext;

}

}

Accessing as follows

package pivotal.au.gemfirexd.demos.connectionpool.spring;

import com.zaxxer.hikari.HikariDataSource;

import org.springframework.context.ApplicationContext;

import java.sql.Connection;

import java.sql.SQLException;

import java.util.logging.Level;

import java.util.logging.Logger;

import java.sql.*;

public class TestHikariCPSpring

{

private ApplicationContext context;

private Logger logger = Logger.getLogger(this.getClass().getSimpleName());

public void run() throws SQLException

{

context = getContext();

HikariDataSource ds = (HikariDataSource) context.getBean("dataSource");

Connection conn = ds.getConnection();

.....

Thursday 5 December 2013

HikariCP (Connection Pool) with Pivotal GemFireXD

I decided to try out the HikariCP as per the link below it says it's the fastest Connection Pool and the most lightweight. It probably is so I thought I would set it up for GemFireXD.

Quote: There is nothing faster.1 There is nothing more correct. HikariCP is a "zero-overhead" production-quality connection pool. Coming in at roughly 50Kb, the library is extremely light.

https://github.com/brettwooldridge/HikariCP

Example Below.

1. Create a Pool class as follows

3. Run Test class and verify output as follows

SLF4J: Failed to load class "org.slf4j.impl.StaticLoggerBinder".

SLF4J: Defaulting to no-operation (NOP) logger implementation

SLF4J: See http://www.slf4j.org/codes.html#StaticLoggerBinder for further details.

Dept[10, ACCOUNTING, NEW YORK]

Dept[20, RESEARCH, DALLAS]

Dept[30, SALES, CHICAGO]

Dept[40, OPERATIONS, BRISBANE]

Dept[50, MARKETING, ADELAIDE]

Dept[60, DEV, PERTH]

Dept[70, SUPPORT, SYDNEY]

Quote: There is nothing faster.1 There is nothing more correct. HikariCP is a "zero-overhead" production-quality connection pool. Coming in at roughly 50Kb, the library is extremely light.

https://github.com/brettwooldridge/HikariCP

Example Below.

1. Create a Pool class as follows

package pivotal.au.gemfirexd.demos.connectionpool;

import com.zaxxer.hikari.HikariConfig;

import com.zaxxer.hikari.HikariDataSource;

import java.sql.Connection;

import java.sql.SQLException;

public class HikariGFXDPool

{

private static HikariGFXDPool instance = null;

private HikariDataSource ds = null;

static

{

try

{

instance = new HikariGFXDPool();

}

catch (Exception e)

{

throw new RuntimeException(e.getMessage(), e);

}

}

private HikariGFXDPool()

{

HikariConfig config = new HikariConfig();

config.setMaximumPoolSize(10);

config.setMinimumPoolSize(2);

config.setDataSourceClassName("com.vmware.sqlfire.internal.jdbc.ClientDataSource");

config.addDataSourceProperty("portNumber", 1527);

config.addDataSourceProperty("serverName", "192.168.1.6");

config.addDataSourceProperty("user", "app");

config.addDataSourceProperty("password", "app");

ds = new HikariDataSource(config);

}

public static HikariGFXDPool getInstance ()

{

return instance;

}

public Connection getConnection() throws SQLException

{

return ds.getConnection();

}

}

2. Create a Test Class as follows

package pivotal.au.gemfirexd.demos.connectionpool;

import java.sql.Connection;

import java.sql.ResultSet;

import java.sql.SQLException;

import java.sql.Statement;

import java.util.logging.Level;

import java.util.logging.Logger;

public class TestPool

{

private Logger logger = Logger.getLogger(this.getClass().getSimpleName());

public void run () throws SQLException

{

Statement stmt = null;

ResultSet rset = null;

Connection conn = null;

HikariGFXDPool pool = HikariGFXDPool.getInstance();

try

{

conn = pool.getConnection();

stmt = conn.createStatement();

rset = stmt.executeQuery("select * from dept order by 1");

while (rset.next())

{

System.out.println(String.format("Dept[%s, %s, %s]",

rset.getInt(1),

rset.getString(2),

rset.getString(3)));

}

}

catch (SQLException se)

{

logger.log(Level.SEVERE, se.getMessage());

}

finally

{

if (stmt != null)

{

stmt.close();

}

if (rset != null)

{

rset.close();

}

if (conn != null)

{

conn.close();

}

}

}

public static void main(String[] args) throws SQLException

{

TestPool test = new TestPool();

test.run();

}

}

3. Run Test class and verify output as follows

SLF4J: Failed to load class "org.slf4j.impl.StaticLoggerBinder".

SLF4J: Defaulting to no-operation (NOP) logger implementation

SLF4J: See http://www.slf4j.org/codes.html#StaticLoggerBinder for further details.

Dept[10, ACCOUNTING, NEW YORK]

Dept[20, RESEARCH, DALLAS]

Dept[30, SALES, CHICAGO]

Dept[40, OPERATIONS, BRISBANE]

Dept[50, MARKETING, ADELAIDE]

Dept[60, DEV, PERTH]

Dept[70, SUPPORT, SYDNEY]

Wednesday 27 November 2013

Pivotal GemFireXD adding an INITCAP function to the distributed system

We recently released Pivotal GemFireXD BETA with the latest release of Pivotal HD. Pivotal GemFire XD is a memory-optimized, distributed data store that is designed for applications that have demanding scalability and availability requirements. With GemFire XD you can manage data entirely using in-memory tables, or you can persist very large tables to local disk store files or to a Hadoop Distributed File System (HDFS) for big data deployments.

In this post we show how to add the popular INITCAP function to GemFireXD or SQLFire.

1. Create a class with a public static method as shown below , this class method will do the INITCAP for us in SQL terms.

3. Create a stored function called INITCAP to use the method defined at #1

http://gopivotal.com/products/pivotal-hd

In this post we show how to add the popular INITCAP function to GemFireXD or SQLFire.

1. Create a class with a public static method as shown below , this class method will do the INITCAP for us in SQL terms.

package pivotal.au.accelarator.gemfirexd.sql.functions;

import org.apache.commons.lang3.StringUtils;

import org.apache.commons.lang3.text.WordUtils;

public class GemFireXdSqlFunctions

{

public static String initcap (String input)

{

return WordUtils.capitalizeFully(input);

}

}

2. Add the class to the classpath of the distributed system.3. Create a stored function called INITCAP to use the method defined at #1

CREATE FUNCTION INITCAP (data VARCHAR(32672)) RETURNS VARCHAR(32672) LANGUAGE JAVA EXTERNAL NAME 'pivotal.au.accelarator.gemfirexd.sql.functions.GemFireXdSqlFunctions.initcap' PARAMETER STYLE JAVA;4. Test as shown below.

sqlf> connect client 'localhost:1527';

sqlf> select initcap('pas APICELLA') from sysibm.sysdummy1;

1

--------------------------------------------------------------------------------------------------------------------------------

Pas Apicella

1 row selected

For more information on Pivotal GemFireXD see the link below.http://gopivotal.com/products/pivotal-hd

Thursday 14 November 2013

Emulating a BOOLEAN Data Type in Pivotal SQLFire

Not all SQL databases provide a Boolean data type and SQLFire which uses Derby has no BOOLEAN data type. In order to use BOOLEAN you can use a SMALLINT using values as 0 or 1 and then make API calls with the JDBC driver calling ResultSet.getBoolean() to give you TRUE or FALSE as shown below.

1. Create table as shown below with some sample rows.

2. Sample JDBC code to query table using ResultSet.getBoolean().

Output

1. Create table as shown below with some sample rows.

sqlf> run './sql/boolean.sql'; sqlf> drop table boolean_test; 0 rows inserted/updated/deleted sqlf> create table boolean_test (col1 smallint); 0 rows inserted/updated/deleted sqlf> insert into boolean_test values (1); 1 row inserted/updated/deleted sqlf> insert into boolean_test values (1); 1 row inserted/updated/deleted sqlf> insert into boolean_test values (0); 1 row inserted/updated/deleted sqlf> insert into boolean_test values (1); 1 row inserted/updated/deleted sqlf> insert into boolean_test values (0); 1 row inserted/updated/deleted sqlf> select * from boolean_test; COL1 ------ 0 1 0 1 1 5 rows selected

2. Sample JDBC code to query table using ResultSet.getBoolean().

public void run() throws SQLException

{

Connection conn = null;

Statement stmt = null;

ResultSet rset = null;

logger.log (Level.INFO, String.format("Connecting to SQLFire with url %s", url));

try

{

conn = DriverManager.getConnection(url);

logger.log(Level.INFO, conn.toString());

stmt = conn.createStatement();

rset = stmt.executeQuery("select * from boolean_test");

while (rset.next())

{

System.out.println("col1 = " + rset.getBoolean(1));

}

}

catch (SQLException se)

{

logger.log(Level.SEVERE, se.getMessage());

}

finally

{

if (conn != null)

{

conn.close();

}

if (stmt != null)

{

stmt.close();

}

if (rset != null)

{

rset.close();

}

}

}

Output

Nov 14, 2013 12:37:24 PM pas.au.apples.sqlfire.types.BooleanDemo run

INFO: Connecting to SQLFire with url jdbc:sqlfire://127.0.0.1:1527/

Nov 14, 2013 12:37:26 PM pas.au.apples.sqlfire.types.BooleanDemo run

INFO: NetConnection@12401369,agent: NetAgent@2cba5bdb:127.0.0.1[1528]

col1 = false

col1 = true

col1 = false

col1 = true

col1 = true

Monday 14 October 2013

Pivotal SQLFire Rowloader for Greenplum

When you use SQLFire as a cache, you can configure a SQL data

loader that is triggered to load data from a backend repository on a miss in

SQLFire. When an incoming query request for a uniquely-identified row cannot be

satisfied by the distributed cache, the loader is invoked to retrieve the data

from an external source. SQLFire locks the associated row and prevents

concurrent readers that are trying to fetch the same row from overloading the

backend database.

The example below is using Greenplum as the main source database. The example is based on this MYSQL example

http://blogs.vmware.com/vfabric/2012/01/using-sqlfire-as-a-read-only-cache-for-mysql.html

1. In this example we use a Greenplum table as follows, showing first 10 rows only, 500K of rows exist.

2. Add Postgress JDBC driver to the classpath of the SQLFire server nodes as well as the examples shipped with SQLFire in this case called "examples.jar". Example shown in the post above.

[Mon Oct 14 22:17:33 papicella@:~/sqlfire/final11build/vFabric_SQLFire_111_b42624/pasdemos/fire-rowloader/lib ] $ d

total 1248

-rw-r--r--@ 1 papicella staff 579785 13 Oct 20:01 postgresql-9.2-1002.jdbc4.jar

-rw-r--r-- 1 papicella staff 57203 13 Oct 20:05 examples.jar

drwxr-xr-x 4 papicella staff 136 13 Oct 20:05 ./

drwxr-xr-x 16 papicella staff 544 13 Oct 22:01 ../

3. From the table above that exists in a schema called "apples" so we must create the same table in SQLFire using the same schema name as shown below.

4. Create a row loader as shown below.

5. Run a test as shown below.

6. Finally the log file will show the connections being established , in this case 5 as a Minimum.

For more information refer to the link below.

http://pubs.vmware.com/vfabric53/index.jsp?topic=/com.vmware.vfabric.sqlfire.1.1/developers_guide/topics/cache/rowloader.html

The example below is using Greenplum as the main source database. The example is based on this MYSQL example

http://blogs.vmware.com/vfabric/2012/01/using-sqlfire-as-a-read-only-cache-for-mysql.html

1. In this example we use a Greenplum table as follows, showing first 10 rows only, 500K of rows exist.

gpadmin=# SELECT * FROM apples.person limit 10; id | name ----+---------- 2 | person2 4 | person4 6 | person6 8 | person8 10 | person10 12 | person12 14 | person14 16 | person16 18 | person18 20 | person20 (10 rows) Time: 11.947 ms

2. Add Postgress JDBC driver to the classpath of the SQLFire server nodes as well as the examples shipped with SQLFire in this case called "examples.jar". Example shown in the post above.

[Mon Oct 14 22:17:33 papicella@:~/sqlfire/final11build/vFabric_SQLFire_111_b42624/pasdemos/fire-rowloader/lib ] $ d

total 1248

-rw-r--r--@ 1 papicella staff 579785 13 Oct 20:01 postgresql-9.2-1002.jdbc4.jar

-rw-r--r-- 1 papicella staff 57203 13 Oct 20:05 examples.jar

drwxr-xr-x 4 papicella staff 136 13 Oct 20:05 ./

drwxr-xr-x 16 papicella staff 544 13 Oct 22:01 ../

3. From the table above that exists in a schema called "apples" so we must create the same table in SQLFire using the same schema name as shown below.

create schema apples; set schema apples; CREATE TABLE person (id int, name varchar(200), primary key (id)) PARTITION BY PRIMARY KEY EVICTION BY LRUCOUNT 500000 EVICTACTION DESTROY; show tables in apples;

4. Create a row loader as shown below.

set schema apples;

call sys.attach_loader('APPLES', 'PERSON', 'examples.JDBCRowLoader', '|url=jdbc:postgresql://127.0.0.1:5432/gpadmin|query-string=SELECT * FROM apples.person WHERE id=?|user=pas|password=pas|min-connections=5|max-connections=100');

5. Run a test as shown below.

sqlf> set schema apples; 0 rows inserted/updated/deleted sqlf> select * from person where id = 100; ID |NAME -------------------------------------------------------------------------------------------------------------------------------------------- 100 |person100 1 row selected sqlf> select * from person; ID |NAME -------------------------------------------------------------------------------------------------------------------------------------------- 100 |person100 1 row selected

6. Finally the log file will show the connections being established , in this case 5 as a Minimum.

[info 2013/10/14 21:47:16.600 EST <Pooled Waiting Message Processor 2> tid=0x52] (tid=11 msgId=1) JDBCRowLoader initialized. [info 2013/10/14 21:47:16.600 EST <Pooled Waiting Message Processor 2> tid=0x52] (tid=11 msgId=2) user: pas [info 2013/10/14 21:47:16.600 EST <Pooled Waiting Message Processor 2> tid=0x52] (tid=11 msgId=3) password: xxx [info 2013/10/14 21:47:16.600 EST <Pooled Waiting Message Processor 2> tid=0x52] (tid=11 msgId=4) url: jdbc:postgresql://127.0.0.1:5432/gpadmin [info 2013/10/14 21:47:16.601 EST <Pooled Waiting Message Processor 2> tid=0x52] (tid=11 msgId=5) min-connections: 5 [info 2013/10/14 21:47:16.601 EST <Pooled Waiting Message Processor 2> tid=0x52] (tid=11 msgId=6) max-connections: 100 [info 2013/10/14 21:47:16.601 EST <Pooled Waiting Message Processor 2> tid=0x52] (tid=11 msgId=7) query-string: SELECT * FROM apples.person WHERE id=? [info 2013/10/14 21:47:16.604 EST <Pooled Waiting Message Processor 2> tid=0x52] AbstractSqlfReplayableMessage: Successfully executed message with fields: SqlfSetLoaderMessage@6127ffd7(processorId=53; processorType=77;posDup=false; replayKey=201; schema = APPLES; table = PERSON; implementation = examples.JDBCRowLoader; initInfoStr = |url=jdbc:postgresql://127.0.0.1:5432/gpadmin|query-string=SELECT * FROM apples.person WHERE id=?|user=pas|password=pas|min-connections=5|max-connections=100) [info 2013/10/14 21:48:55.513 EST <Pooled Waiting Message Processor 3> tid=0x53] Initializing region _B__APPLES_PERSON_100 [info 2013/10/14 21:48:55.530 EST <PartitionedRegion Message Processor2> tid=0x56] (tid=12 msgId=8) JDBCRowLoader invoked to fetch from schema <APPLES> on table <PERSON>. [info 2013/10/14 21:48:55.530 EST <PartitionedRegion Message Processor2> tid=0x56] (tid=12 msgId=9) primary key element 0: 100 [info 2013/10/14 21:48:55.566 EST <pool-1-thread-6> tid=0x5c] (tid=13 msgId=10) Successful connection to target database: jdbc:postgresql://127.0.0.1:5432/gpadmin [info 2013/10/14 21:48:55.566 EST <pool-1-thread-1> tid=0x57] (tid=15 msgId=12) Successful connection to target database: jdbc:postgresql://127.0.0.1:5432/gpadmin [info 2013/10/14 21:48:55.566 EST <pool-1-thread-2> tid=0x58] (tid=18 msgId=15) Successful connection to target database: jdbc:postgresql://127.0.0.1:5432/gpadmin [info 2013/10/14 21:48:55.566 EST <pool-1-thread-5> tid=0x5b] (tid=14 msgId=11) Successful connection to target database: jdbc:postgresql://127.0.0.1:5432/gpadmin [info 2013/10/14 21:48:55.566 EST <pool-1-thread-3> tid=0x59] (tid=16 msgId=13) Successful connection to target database: jdbc:postgresql://127.0.0.1:5432/gpadmin [info 2013/10/14 21:48:55.566 EST <pool-1-thread-4> tid=0x5a] (tid=17 msgId=14) Successful connection to target database: jdbc:postgresql://127.0.0.1:5432/gpadmin [info 2013/10/14 21:48:55.791 EST <PartitionedRegion Message Processor2> tid=0x56] (tid=12 msgId=16) Executing query SELECT * FROM apples.person WHERE id=100 [info 2013/10/14 21:48:55.829 EST <PartitionedRegion Message Processor2> tid=0x56] (tid=12 msgId=17) Query succeeded

For more information refer to the link below.

http://pubs.vmware.com/vfabric53/index.jsp?topic=/com.vmware.vfabric.sqlfire.1.1/developers_guide/topics/cache/rowloader.html

Friday 4 October 2013

Spring MVC Rest - Content Negotiation using JSON/XML

In this example we will show how we can determine which data format to return writing just a single controller method. In this example we either return XML or JSON and the system knows whether to convert to XML or JSON because of content negotiation. This example is based on the example provided by Paul on the link below.

I have taken what Paul described into a working example of my own using Content Negotiation with Spring MVC Rest support.

http://spring.io/blog/2013/05/11/content-negotiation-using-spring-mvc

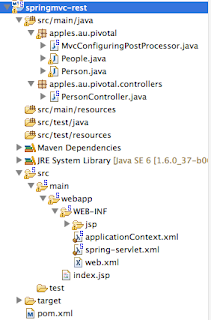

The project I created looks as follows

The complete code for this example exists on GitHub as follows

https://github.com/papicella/SpringMVCRest-ContentNegotiation

1. Create the required pom.xml dependancy elements as shown below.

pom.xml

2. Create a Person class as follows

Person.java

3. Create a People class which provides a list of Person objects, we do this so we can return XML as a List doesn't work directly with JAXB

People.java

4. Create a controller as follows, there is a request mapping for HTML as well here with the same path, which is the default, in order not to duplicate code we call the JSON/XML method from the HTML method itself.

PersonController.java

5. Create a Spring XML file as follows

applicationContext.xml

6. Create a class to enable pretty print for JSON data

MvcConfiguringPostProcessor.java

7. Finally create a HTML view page

people.jsp

8. Run the application and access the 3 supported views with request mapping path of "/people"

HTML - http://localhost:8080/springmvc-rest/apples/people

JSON - http://localhost:8080/springmvc-rest/apples/people.json

XML - http://localhost:8080/springmvc-rest/apples/people.xml

I have taken what Paul described into a working example of my own using Content Negotiation with Spring MVC Rest support.

http://spring.io/blog/2013/05/11/content-negotiation-using-spring-mvc

The project I created looks as follows

The complete code for this example exists on GitHub as follows

https://github.com/papicella/SpringMVCRest-ContentNegotiation

1. Create the required pom.xml dependancy elements as shown below.

pom.xml

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>springmvc-rest</groupId>

<artifactId>springmvc-rest</artifactId>

<version>0.0.1-SNAPSHOT</version>

<packaging>war</packaging>

<properties>

<spring.version>3.2.4.RELEASE</spring.version>

</properties>

<dependencies>

<dependency>

<groupId>org.springframework</groupId>

<artifactId>spring-core</artifactId>

<version>${spring.version}</version>

</dependency>

<dependency>

<groupId>org.springframework</groupId>

<artifactId>spring-web</artifactId>

<version>${spring.version}</version>

</dependency>

<dependency>

<groupId>org.springframework</groupId>

<artifactId>spring-webmvc</artifactId>

<version>${spring.version}</version>

</dependency>

<dependency>

<groupId>org.springframework</groupId>

<artifactId>spring-aop</artifactId>

<version>${spring.version}</version>

</dependency>

<dependency>

<groupId>javax.servlet</groupId>

<artifactId>jstl</artifactId>

<version>1.2</version>

</dependency>

<dependency>

<groupId>javax.servlet</groupId>

<artifactId>javax.servlet-api</artifactId>

<version>3.0.1</version>

</dependency>

<dependency>

<groupId>javax.servlet</groupId>

<artifactId>javax.servlet-api</artifactId>

<version>3.0.1</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.codehaus.jackson</groupId>

<artifactId>jackson-mapper-asl</artifactId>

<version>1.9.4</version>

</dependency>

<dependency>

<groupId>javax.xml.bind</groupId>

<artifactId>jaxb-api</artifactId>

<version>2.2.6</version>

</dependency>

<dependency>

<groupId>javax.xml</groupId>

<artifactId>jaxb-impl</artifactId>

<version>2.1</version>

</dependency>

</dependencies>

</project>

2. Create a Person class as follows

Person.java

package apples.au.pivotal;

import java.io.Serializable;

import javax.xml.bind.annotation.XmlRootElement;

@XmlRootElement

public class Person implements Serializable

{

private int id;

private String firstName;

private String lastName;

public Person()

{

// TODO Auto-generated constructor stub

}

public Person(int id, String firstName, String lastName) {

super();

this.id = id;

this.firstName = firstName;

this.lastName = lastName;

}

public int getId() {

return id;

}

public void setId(int id) {

this.id = id;

}

public String getFirstName() {

return firstName;

}

public void setFirstName(String firstName) {

this.firstName = firstName;

}

public String getLastName() {

return lastName;

}

public void setLastName(String lastName) {

this.lastName = lastName;

}

@Override

public String toString() {

return "Person [id=" + id + ", firstName=" + firstName + ", lastName="

+ lastName + "]";

}

}

3. Create a People class which provides a list of Person objects, we do this so we can return XML as a List doesn't work directly with JAXB

People.java

package apples.au.pivotal;

import java.util.List;

import javax.xml.bind.annotation.XmlElement;

import javax.xml.bind.annotation.XmlRootElement;

@XmlRootElement(name="people")

@SuppressWarnings("serial")

public class People

{

private List<Person> people;

protected People() {} // Keep JAXB happy

public People(List<Person> people)

{

this.people = people;

}

@XmlElement(name="person")

public List<Person> getPeople()

{

return people;

}

}

4. Create a controller as follows, there is a request mapping for HTML as well here with the same path, which is the default, in order not to duplicate code we call the JSON/XML method from the HTML method itself.

PersonController.java

package apples.au.pivotal.controllers;

import java.util.Arrays;

import java.util.List;

import javax.servlet.http.HttpServletRequest;

import javax.servlet.http.HttpServletResponse;

import org.springframework.http.HttpStatus;

import org.springframework.stereotype.Controller;

import org.springframework.ui.Model;

import org.springframework.web.bind.annotation.RequestMapping;

import org.springframework.web.bind.annotation.RequestMethod;

import org.springframework.web.bind.annotation.ResponseBody;

import org.springframework.web.bind.annotation.ResponseStatus;

import apples.au.pivotal.People;

import apples.au.pivotal.Person;

@Controller

public class PersonController

{

private static List<Person> personList;

static

{

personList =

Arrays.asList(new Person[]

{ new Person(1, "Pas", "Apicella"),

new Person(2, "Lucia", "Apicella"),

new Person(3, "Lucas", "Apicella"),

new Person(4, "Siena", "Apicella")

});

}

@RequestMapping(value="/people",

method = RequestMethod.GET,

produces={"application/xml", "application/json"})

@ResponseStatus(HttpStatus.OK)

public @ResponseBody People listWithJSON()

{

return new People(personList);

}

// View-based method

@RequestMapping(value = "/people", method = RequestMethod.GET)

public String listWithView(Model model, HttpServletResponse response, HttpServletRequest request)

{

// Call RESTful method to avoid repeating code

model.addAttribute("peopleList", listWithJSON().getPeople());

// Return the view to use for rendering the response

return "people";

}

}

5. Create a Spring XML file as follows

applicationContext.xml

<?xml version="1.0" encoding="UTF-8"?>

<beans xmlns="http://www.springframework.org/schema/beans"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xmlns:context="http://www.springframework.org/schema/context"

xmlns:util="http://www.springframework.org/schema/util"

xmlns:mvc="http://www.springframework.org/schema/mvc"

xsi:schemaLocation="http://www.springframework.org/schema/mvc http://www.springframework.org/schema/mvc/spring-mvc-3.2.xsd

http://www.springframework.org/schema/beans http://www.springframework.org/schema/beans/spring-beans.xsd

http://www.springframework.org/schema/util http://www.springframework.org/schema/util/spring-util-3.2.xsd

http://www.springframework.org/schema/context http://www.springframework.org/schema/context/spring-context-3.2.xsd">

<!-- Activates various annotations to be detected in bean classes -->

<context:annotation-config />

<!-- Scans the classpath for annotated components that will be auto-registered as Spring beans.

For example @Controller and @Service. Make sure to set the correct base-package-->

<context:component-scan base-package="apples.au.pivotal.controllers" />

<!-- Configures the annotation-driven Spring MVC Controller programming model.

Note that, with Spring 3.0, this tag works in Servlet MVC only! -->

<bean id="cnManager"

class="org.springframework.web.accept.ContentNegotiationManagerFactoryBean">

<property name="favorPathExtension" value="true"/>

<property name="ignoreAcceptHeader" value="true" />

<property name="defaultContentType" value="text/html" />

<property name="useJaf" value="false"/>

<property name="mediaTypes">

<map>

<entry key="html" value="text/html" />

<entry key="json" value="application/json" />

<entry key="xml" value="application/xml" />

</map>

</property>

</bean>

<mvc:annotation-driven content-negotiation-manager="cnManager"/>

<bean class="apples.au.pivotal.MvcConfiguringPostProcessor" />

</beans>

6. Create a class to enable pretty print for JSON data

MvcConfiguringPostProcessor.java

package apples.au.pivotal;

import org.springframework.beans.BeansException;

import org.springframework.beans.factory.config.BeanPostProcessor;

import org.springframework.http.converter.HttpMessageConverter;

import org.springframework.http.converter.json.MappingJackson2HttpMessageConverter;

import org.springframework.http.converter.json.MappingJacksonHttpMessageConverter;

/**

* The HTTP Message converters are created automatically by Spring. To perform

* additional configuration we use a bean post-processor.

*/

public class MvcConfiguringPostProcessor implements BeanPostProcessor {

/**

* Enable pretty print on any bean of type

* {@link MappingJacksonHttpMessageConverter} or

* {@link MappingJackson2HttpMessageConverter}.

*/

public Object postProcessBeforeInitialization(Object bean, String name) throws BeansException

{

if (bean instanceof HttpMessageConverter<?>)

if (bean instanceof MappingJacksonHttpMessageConverter) {

((MappingJacksonHttpMessageConverter) bean).setPrettyPrint(true);

}

else if (bean instanceof MappingJackson2HttpMessageConverter) {

((MappingJackson2HttpMessageConverter) bean).setPrettyPrint(true);

}

return bean;

}

public Object postProcessAfterInitialization(Object bean, String beanName) throws BeansException

{

// Nothing to do

return bean;

}

}

7. Finally create a HTML view page

people.jsp

<%@ page language="java" contentType="text/html; charset=UTF-8"

pageEncoding="UTF-8"%>

<%@ taglib uri="http://java.sun.com/jsp/jstl/core" prefix="c"%>

<!DOCTYPE html PUBLIC "-//W3C//DTD HTML 4.01 Transitional//EN" "http://www.w3.org/TR/html4/loose.dtd">

<html>

<head>

<meta http-equiv="Content-Type" content="text/html; charset=UTF-8">

<title>Spring MVC Rest Demo</title>

</head>

<body>

<h2>Spring MVC Rest Demo</h2>

<table border="1">

<tr>

<th>Id</th>

<th>First Name</th>

<th>Last Name</th>

</tr>

<c:forEach var="row" varStatus="loop" items="${peopleList}">

<tr>

<td>${row.id}</td>

<td>${row.firstName}</td>

<td>${row.lastName}</td>

</tr>

</c:forEach>

</table>

<p />

Created by Pas Apicella

</body>

</html>

8. Run the application and access the 3 supported views with request mapping path of "/people"

HTML - http://localhost:8080/springmvc-rest/apples/people

JSON - http://localhost:8080/springmvc-rest/apples/people.json

XML - http://localhost:8080/springmvc-rest/apples/people.xml

Wednesday 2 October 2013

Spring Data GemFire GemfireTemplate

As with many other high-level abstractions provided by the Spring projects, Spring Data GemFire provides a template that simplifies GemFire data access. The class provides several one-line methods, for common region operations but also the ability to execute code against the native GemFire API without having to deal with GemFire checked exceptions for example through the GemfireCallback.

The template class requires a GemFire Region instance and once configured is thread-safe and should be reused across multiple classes:

Example:

In this example we are using and existing GemFire cluster which has DEPT / EMP objects already existing in the distributed system as shown below.

1. client.xml (GemFire Client cache XML file)

2. application-context.xml

3. TestGemFireTemplate.java

Output as shown below.

Starting Spring Data GemFire Template Test....

-> template.query() test

Employee [empno=7380, name=BLACK, job=CLERK, deptno=40]

Employee [empno=7381, name=BROWN, job=SALESMAN, deptno=40]

Employee [empno=7373, name=SIENA, job=CLERK, deptno=40]

-> template.get(key) test

Employee [empno=7373, name=SIENA, job=CLERK, deptno=40]

-> template.find() test

Employee [empno=7369, name=SMITH, job=CLERK, deptno=20]

Employee [empno=7375, name=ROB, job=CLERK, deptno=30]

Employee [empno=7376, name=ADRIAN, job=CLERK, deptno=20]

Employee [empno=7380, name=BLACK, job=CLERK, deptno=40]

Employee [empno=7377, name=ADAM, job=CLERK, deptno=20]

Employee [empno=7379, name=FRANK, job=CLERK, deptno=10]

Employee [empno=7373, name=SIENA, job=CLERK, deptno=40]

All done....

Example:

In this example we are using and existing GemFire cluster which has DEPT / EMP objects already existing in the distributed system as shown below.

gfsh>query --query="select * from /departments"; Result : true startCount : 0 endCount : 20 Rows : 4 deptno | name ------ | ---------- 40 | OPERATIONS 30 | SALES 10 | ACCOUNTING 20 | RESEARCH NEXT_STEP_NAME : END gfsh>query --query="select * from /employees"; Result : true startCount : 0 endCount : 20 Rows : 13 empno | deptno | name | job ----- | ------ | -------- | --------- 7380 | 40 | BLACK | CLERK 7373 | 40 | SIENA | CLERK 7377 | 20 | ADAM | CLERK 7370 | 10 | APPLES | MANAGER 7381 | 40 | BROWN | SALESMAN 7379 | 10 | FRANK | CLERK 7375 | 30 | ROB | CLERK 7371 | 10 | APICELLA | SALESMAN 7374 | 10 | LUCAS | SALESMAN 7378 | 20 | SALLY | MANAGER 7372 | 30 | LUCIA | PRESIDENT 7376 | 20 | ADRIAN | CLERK 7369 | 20 | SMITH | CLERK NEXT_STEP_NAME : END

1. client.xml (GemFire Client cache XML file)

<!DOCTYPE client-cache PUBLIC

"-//GemStone Systems, Inc.//GemFire Declarative Caching 7//EN"

"http://www.gemstone.com/dtd/cache7_0.dtd">

<client-cache>

<pdx>

<pdx-serializer>

<class-name>com.gemstone.gemfire.pdx.ReflectionBasedAutoSerializer</class-name>

<parameter name="classes">

<string>pivotal\.au\.se\.deptemp\.beans\..*</string>

</parameter>

</pdx-serializer>

</pdx>

<!-- No cache storage in the client region because of the PROXY client region shortcut setting. -->

<region name="departments">

<region-attributes refid="PROXY" pool-name="gfPool" />

</region>

<region name="employees">

<region-attributes refid="PROXY" pool-name="gfPool" />

</region>

</client-cache>

2. application-context.xml

<?xml version="1.0" encoding="UTF-8"?>

<beans xmlns="http://www.springframework.org/schema/beans"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xmlns:gfe-data="http://www.springframework.org/schema/data/gemfire"

xmlns:gfe="http://www.springframework.org/schema/gemfire"

xmlns:util="http://www.springframework.org/schema/util"

xsi:schemaLocation="http://www.springframework.org/schema/gemfire http://www.springframework.org/schema/gemfire/spring-gemfire-1.3.xsd

http://www.springframework.org/schema/beans http://www.springframework.org/schema/beans/spring-beans.xsd

http://www.springframework.org/schema/util http://www.springframework.org/schema/util/spring-util-3.1.xsd

http://www.springframework.org/schema/data/gemfire http://www.springframework.org/schema/data/gemfire/spring-data-gemfire-1.3.xsd">

<gfe:client-cache

id="client-cache"

cache-xml-location="classpath:client.xml"

pool-name="gfPool"

properties-ref="props" />

<gfe:pool id="gfPool" max-connections="10">

<gfe:locator host="localhost" port="10334"/>

</gfe:pool>

<gfe:lookup-region id="departments" name="departments" cache-ref="client-cache"/>

<gfe:lookup-region id="employees" name="employees" cache-ref="client-cache"/>

<gfe-data:repositories base-package="pivotal.au.se.deptemp.repos" />

<util:properties id="props" location="classpath:gemfire.properties"/>

</beans>

Here we simply import the client.xml file and then define the regions we wish to access from GemFire.3. TestGemFireTemplate.java

package pivotal.au.se.deptemp.test;

import java.util.Collection;

import org.springframework.context.ConfigurableApplicationContext;

import org.springframework.context.support.ClassPathXmlApplicationContext;

import org.springframework.data.gemfire.GemfireTemplate;

import pivotal.au.se.deptemp.beans.Employee;

import com.gemstone.gemfire.cache.Region;

import com.gemstone.gemfire.cache.query.SelectResults;

public class TestGemFireTemplate

{

private ConfigurableApplicationContext ctx = null;

public TestGemFireTemplate()

{

ctx = new ClassPathXmlApplicationContext("application-context.xml");

}

public void run ()

{

@SuppressWarnings("unchecked")

GemfireTemplate empTemplate = new GemfireTemplate((Region) ctx.getBean("employees"));

System.out.println("-> template.query() test \n ");

SelectResults<?> results = empTemplate.query("deptno = 40");

Collection<Employee> emps = (Collection<Employee>) results.asList();

for (Employee e: emps)

{

System.out.println(e.toString());

}

System.out.println("\n-> template.get(key) test \n ");

Employee emp = empTemplate.get("7373");

System.out.println(emp.toString());

System.out.println("\n-> template.find() test \n ");

SelectResults<Employee> clerkEmpResults =

empTemplate.find("SELECT * from /employees WHERE job=$1", "CLERK");

Collection<Employee> clerkEmps = (Collection<Employee>) clerkEmpResults.asList();

for (Employee e: clerkEmps)

{

System.out.println(e.toString());

}

}

public static void main(String[] args)

{

// TODO Auto-generated method stub

TestGemFireTemplate test = new TestGemFireTemplate();

System.out.println("\nStarting Spring Data GemFire Template Test.... \n");

test.run();

System.out.println("\nAll done.... ");

}

}

Output as shown below.

Starting Spring Data GemFire Template Test....

-> template.query() test

Employee [empno=7380, name=BLACK, job=CLERK, deptno=40]

Employee [empno=7381, name=BROWN, job=SALESMAN, deptno=40]

Employee [empno=7373, name=SIENA, job=CLERK, deptno=40]

-> template.get(key) test

Employee [empno=7373, name=SIENA, job=CLERK, deptno=40]

-> template.find() test

Employee [empno=7369, name=SMITH, job=CLERK, deptno=20]

Employee [empno=7375, name=ROB, job=CLERK, deptno=30]

Employee [empno=7376, name=ADRIAN, job=CLERK, deptno=20]

Employee [empno=7380, name=BLACK, job=CLERK, deptno=40]

Employee [empno=7377, name=ADAM, job=CLERK, deptno=20]

Employee [empno=7379, name=FRANK, job=CLERK, deptno=10]

Employee [empno=7373, name=SIENA, job=CLERK, deptno=40]

All done....

Thursday 26 September 2013

Functions in GemFire

You can execute data-independent functions or data-dependent functions

in vFabric GemFire. You run data-independent functions by targeting a specific

member or specific members in your distributed system, or by targeting logical

member groups on which to execute your function. If you are executing a

data-dependent function, you specify a region on which to execute the function.

Lets start with a very basic function which in this example takes a parameter of Type Map and inserts into into the Region using Region.putAll method.

ServerPutAllFunction.java

Cahe.xml showing how to define the function

Now lets connect to GFSH and display the functions available on all members.

Calling the function from a client would be done as follows

Lets start with a very basic function which in this example takes a parameter of Type Map and inserts into into the Region using Region.putAll method.

ServerPutAllFunction.java

package pivotal.au.gemfire.performance.functions;

import java.util.Map;

import java.util.Properties;

import com.gemstone.gemfire.cache.CacheFactory;

import com.gemstone.gemfire.cache.Declarable;

import com.gemstone.gemfire.cache.GemFireCache;

import com.gemstone.gemfire.cache.Region;

import com.gemstone.gemfire.cache.execute.FunctionAdapter;

import com.gemstone.gemfire.cache.execute.FunctionContext;

public class ServerPutAllFunction extends FunctionAdapter implements Declarable

{

private static final long serialVersionUID = 1L;

public static final String ID = "serverputall-function";

private GemFireCache cache;

public ServerPutAllFunction()

{

this.cache = CacheFactory.getAnyInstance();

}

@Override

public void init(Properties arg0)

{

// TODO Auto-generated method stub

}

@Override

public void execute(FunctionContext context)

{

Map testMap = (Map) context.getArguments();

Region region = this.cache.getRegion("testRegionFunction");

region.putAll(testMap);

context.getResultSender().lastResult(testMap.size());

}

@Override

public String getId()

{

// TODO Auto-generated method stub

return ID;

}

}

Cahe.xml showing how to define the function

<?xml version="1.0" encoding="UTF-8"?>

<!DOCTYPE cache PUBLIC "-//GemStone Systems, Inc.//GemFire Declarative Cache 7.0//EN"

"http://www.gemstone.com/dtd/cache7_0.dtd">

<cache is-server="true">

<cache-server port="0" notify-by-subscription="true"/>

<pdx read-serialized="false">

<pdx-serializer>

<class-name>com.gemstone.gemfire.pdx.ReflectionBasedAutoSerializer</class-name>

<parameter name="classes">

<string>pivotal\.au\.gemfire\.performance\..*</string>

</parameter>

</pdx-serializer>

</pdx>

<region name="testRegion">

<region-attributes data-policy="partition"

statistics-enabled="true"

concurrency-level="16">

<partition-attributes redundant-copies="1" total-num-buckets="113"/>

</region-attributes>

</region>

<region name="testRegionFunction">

<region-attributes data-policy="partition"

statistics-enabled="true"

concurrency-level="16">

<partition-attributes redundant-copies="1" total-num-buckets="113"/>

</region-attributes>

</region>

<function-service>

<function>

<class-name>pivotal.au.gemfire.performance.functions.SizeFunction</class-name>

</function>

<function>

<class-name>pivotal.au.gemfire.performance.functions.ServerPutAllFunction</class-name>

</function>

</function-service>

</cache>

Now lets connect to GFSH and display the functions available on all members.

gfsh>connect --locator=localhost[10334] Connecting to Locator at [host=localhost, port=10334] .. Connecting to Manager at [host=192-168-1-5.tpgi.com.au, port=1099] .. Successfully connected to: [host=192-168-1-5.tpgi.com.au, port=1099] Cluster-1 gfsh>list functions Member | Function ------- | --------------------- server1 | serverputall-function server1 | size-function server2 | serverputall-function server2 | size-function

Calling the function from a client would be done as follows

public void run() {

Map buffer = new HashMap();

Execution execution = null;

int dataSize = SAMPLE_SIZE / nThreads;

System.out.printf("Start: %d End: %d \n",(dataSize * (increment - 1)), (dataSize * increment));

for (int i = (dataSize * (increment - 1)); i < (dataSize * increment); i++) {

buffer.put(String.valueOf(i), new Test(i, "name" + i));

if (buffer.size() % BATCH_SIZE == 0) {

try {

execution = FunctionService.onServer(cache).withArgs(buffer);

ResultCollector<?, ?> collector = execution.execute(ServerPutAllFunction.ID);

counter += buffer.size();

buffer.clear();

} catch (Exception e) {

e.printStackTrace();

}

}

}

if (!buffer.isEmpty()) {

execution = FunctionService.onServer(cache).withArgs(buffer);

ResultCollector<?, ?> collector = execution.execute(ServerPutAllFunction.ID);

counter += buffer.size();

}

}

Monday 23 September 2013

Check which JDK's Installed on MAC Os X

Note for myself:

[Mon Sep 23 08:16:42 papicella@:~ ] $ /usr/libexec/java_home -V

Matching Java Virtual Machines (4):

1.7.0_25, x86_64: "Java SE 7" /Library/Java/JavaVirtualMachines/jdk1.7.0_25.jdk/Contents/Home

1.7.0_12, x86_64: "Java SE 7" /Library/Java/JavaVirtualMachines/jdk1.7.0_12.jdk/Contents/Home

1.6.0_51-b11-457, x86_64: "Java SE 6" /System/Library/Java/JavaVirtualMachines/1.6.0.jdk/Contents/Home

1.6.0_51-b11-457, i386: "Java SE 6" /System/Library/Java/JavaVirtualMachines/1.6.0.jdk/Contents/Home

/Library/Java/JavaVirtualMachines/jdk1.7.0_25.jdk/Contents/Home

[Mon Sep 23 08:16:42 papicella@:~ ] $ /usr/libexec/java_home -V

Matching Java Virtual Machines (4):

1.7.0_25, x86_64: "Java SE 7" /Library/Java/JavaVirtualMachines/jdk1.7.0_25.jdk/Contents/Home

1.7.0_12, x86_64: "Java SE 7" /Library/Java/JavaVirtualMachines/jdk1.7.0_12.jdk/Contents/Home

1.6.0_51-b11-457, x86_64: "Java SE 6" /System/Library/Java/JavaVirtualMachines/1.6.0.jdk/Contents/Home

1.6.0_51-b11-457, i386: "Java SE 6" /System/Library/Java/JavaVirtualMachines/1.6.0.jdk/Contents/Home

/Library/Java/JavaVirtualMachines/jdk1.7.0_25.jdk/Contents/Home

Wednesday 18 September 2013

Using Scala to connect to Greenplum

In this demo we use a basic Scala program to connect to Greenplum using the Postgres JDBC driver.

1. Create a scala program as shown below.

ScalaGreenplumDemo.scala

2. Compile it ensuring you have the Postgres JDBC jar file in the classpath which in this example is the directory where we are running from.

export CUR_DIR=`pwd`

scalac -classpath $CUR_DIR/postgresql-9.2-1002.jdbc4.jar:. ScalaGreenplumDemo.scala

3. Run it as follows

export CUR_DIR=`pwd`

scala -classpath $CUR_DIR/postgresql-9.2-1002.jdbc4.jar:. ScalaGreenplumDemo

Output

empno=7499, ename = ALLEN

empno=7521, ename = WARD

empno=7698, ename = BLAKE

empno=7782, ename = CLARK

empno=7788, ename = SCOTT

empno=7844, ename = TURNER

empno=7876, ename = ADAMS

empno=7900, ename = JAMES

empno=7902, ename = FORD

empno=7934, ename = MILLER

empno=7111, ename = LUCIA

empno=7113, ename = SIENA

empno=7369, ename = SMITH

empno=7566, ename = JONES

empno=7654, ename = MARTIN

empno=7839, ename = KING

empno=7933, ename = PAS

empno=7112, ename = LUCAS

1. Create a scala program as shown below.

ScalaGreenplumDemo.scala

import java.sql.DriverManager

import java.sql.Connection

object ScalaGreenplumDemo {

def main(args: Array[String]) {

val driver = "org.postgresql.Driver"

val url = "jdbc:postgresql://127.0.0.1:5432/gpadmin"

val username = "pas"

val password = "pas"

var connection:Connection = null

try {

// make the connection

Class.forName(driver)

connection = DriverManager.getConnection(url, username, password)

// create the statement, and run the select query

val statement = connection.createStatement()

val resultSet = statement.executeQuery("select * from scott.emp;")

while ( resultSet.next() ) {

val empno = resultSet.getString("empno")

val ename = resultSet.getString("ename")

println("empno=" + empno + ", ename = " + ename)

}

} catch {

case e: Throwable => e.printStackTrace

}

connection.close()

}

}

2. Compile it ensuring you have the Postgres JDBC jar file in the classpath which in this example is the directory where we are running from.

export CUR_DIR=`pwd`

scalac -classpath $CUR_DIR/postgresql-9.2-1002.jdbc4.jar:. ScalaGreenplumDemo.scala

export CUR_DIR=`pwd`

scala -classpath $CUR_DIR/postgresql-9.2-1002.jdbc4.jar:. ScalaGreenplumDemo

Output

empno=7499, ename = ALLEN

empno=7521, ename = WARD

empno=7698, ename = BLAKE

empno=7782, ename = CLARK

empno=7788, ename = SCOTT

empno=7844, ename = TURNER

empno=7876, ename = ADAMS

empno=7900, ename = JAMES

empno=7902, ename = FORD

empno=7934, ename = MILLER

empno=7111, ename = LUCIA

empno=7113, ename = SIENA

empno=7369, ename = SMITH

empno=7566, ename = JONES

empno=7654, ename = MARTIN

empno=7839, ename = KING

empno=7933, ename = PAS

empno=7112, ename = LUCAS

Monday 16 September 2013

Spring JDBC with PivotalHD and Hawq

HAWQ enables SQL for Hadoop ensuring we can use something like Spring JDBC as shown below. In this example we use the PivotalHD VM with data from a HAWQ append only table as shown below.

Code

Customer.java (POJO)

DAO : Constants.java

DAO : CustomerDAO.java

DAO : CustomerDAOImpl.java

application-context.xml

jdbc.properties

jdbc.driverClassName=org.postgresql.Driver

jdbc.url=jdbc:postgresql://172.16.62.142:5432/gpadmin

jdbc.username=gpadmin

jdbc.password=gpadmin

TestCustomerDAO.java

Output

http://blog.gopivotal.com/products/pivotal-hd-ga

This page dives deeper into the PHD VM with a walkthrough from data loading, map reduce and SQL queries.

http://pivotalhd.cfapps.io/getting-started/pivotalhd-vm.html

Finally, the following link is the direct download location of the VM discussed above above.

http://bitcast-a.v1.o1.sjc1.bitgravity.com/greenplum/pivotal-sw/pivotalhd_singlenodevm_101_v1.7z

gpadmin=# \dt

List of relations

Schema | Name | Type | Owner | Storage

-------------+-----------------------------+-------+---------+-------------

retail_demo | categories_dim_hawq | table | gpadmin | append only

retail_demo | customer_addresses_dim_hawq | table | gpadmin | append only

retail_demo | customers_dim_hawq | table | gpadmin | append only

retail_demo | date_dim_hawq | table | gpadmin | append only

retail_demo | email_addresses_dim_hawq | table | gpadmin | append only

retail_demo | order_lineitems_hawq | table | gpadmin | append only

retail_demo | orders_hawq | table | gpadmin | append only

retail_demo | payment_methods_hawq | table | gpadmin | append only

retail_demo | products_dim_hawq | table | gpadmin | append only

(9 rows)

gpadmin=# select * from customers_dim_hawq limit 5;

customer_id | first_name | last_name | gender

-------------+------------+-----------+--------

11371 | Delphine | Williams | F

5480 | Everett | Johnson | M

26030 | Dominique | Davis | M

41922 | Brice | Martinez | M

47265 | Iva | Wilson | F

(5 rows)

Time: 57.334 ms

Code

Customer.java (POJO)

package pivotal.au.hawq.beans;

public class Customer {

public String customerId;

public String firstName;

public String lastName;

public String gender;

public Customer()

{

}

public Customer(String customerId, String firstName, String lastName,

String gender) {

super();

this.customerId = customerId;

this.firstName = firstName;

this.lastName = lastName;

this.gender = gender;

}

..... getters/setters etc ....

DAO : Constants.java

package pivotal.au.hawq.dao;

public interface Constants

{

public static final String SELECT_CUSTOMER = "select * from retail_demo.customers_dim_hawq where customer_id = ?";

public static final String SELECT_FIRST_FIVE_CUSTOMERS = "select * from retail_demo.customers_dim_hawq limit 5";

}

DAO : CustomerDAO.java

package pivotal.au.hawq.dao;

import java.util.List;

import pivotal.au.hawq.beans.Customer;

public interface CustomerDAO

{

public Customer selectCustomer (String customerId);

public List<Customer> firstFiveCustomers();

}

DAO : CustomerDAOImpl.java

package pivotal.au.hawq.dao;

import java.util.ArrayList;

import java.util.List;

import javax.sql.DataSource;

import org.springframework.jdbc.core.BeanPropertyRowMapper;

import org.springframework.jdbc.core.JdbcTemplate;

import pivotal.au.hawq.beans.Customer;

public class CustomerDAOImpl implements CustomerDAO

{

private JdbcTemplate jdbcTemplate;

public void setDataSource(DataSource dataSource)

{

this.jdbcTemplate = new JdbcTemplate(dataSource);

}

public Customer selectCustomer(String customerId)

{

return (Customer) jdbcTemplate.queryForObject

(Constants.SELECT_CUSTOMER,

new Object[] { customerId },

new BeanPropertyRowMapper<Customer>( Customer.class));

}

public List<Customer> firstFiveCustomers()

{

List<Customer> customers = new ArrayList<Customer>();

customers = jdbcTemplate.query(Constants.SELECT_FIRST_FIVE_CUSTOMERS,

new BeanPropertyRowMapper<Customer>( Customer.class));

return customers;

}

}

application-context.xml

<?xml version="1.0" encoding="UTF-8"?>

<beans xmlns="http://www.springframework.org/schema/beans"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xmlns:jdbc="http://www.springframework.org/schema/jdbc"

xmlns:context="http://www.springframework.org/schema/context"

xsi:schemaLocation="http://www.springframework.org/schema/jdbc http://www.springframework.org/schema/jdbc/spring-jdbc-3.2.xsd

http://www.springframework.org/schema/beans http://www.springframework.org/schema/beans/spring-beans.xsd

http://www.springframework.org/schema/context http://www.springframework.org/schema/context/spring-context-3.2.xsd">

<context:property-placeholder location="classpath:/jdbc.properties"/>

<bean id="pivotalHDDataSource" class="org.apache.commons.dbcp.BasicDataSource" destroy-method="close">

<property name="driverClassName" value="${jdbc.driverClassName}" />

<property name="url" value="${jdbc.url}" />

<property name="username" value="${jdbc.username}" />

<property name="password" value="${jdbc.password}" />

</bean>

<bean id="customerDAOImpl" class="pivotal.au.hawq.dao.CustomerDAOImpl">

<property name="dataSource" ref="pivotalHDDataSource" />

</bean>

</beans>

jdbc.properties

jdbc.driverClassName=org.postgresql.Driver

jdbc.url=jdbc:postgresql://172.16.62.142:5432/gpadmin

jdbc.username=gpadmin

jdbc.password=gpadmin

TestCustomerDAO.java

package pivotal.au.hawq.dao.test;

import java.util.List;

import java.util.logging.Level;

import java.util.logging.Logger;

import org.springframework.context.ApplicationContext;

import org.springframework.context.support.ClassPathXmlApplicationContext;

import pivotal.au.hawq.beans.Customer;

import pivotal.au.hawq.dao.CustomerDAO;

public class TestCustomerDAO

{

private Logger logger = Logger.getLogger(this.getClass().getSimpleName());

private ApplicationContext context;

private static final String BEAN_NAME = "customerDAOImpl";

private CustomerDAO customerDAO;

public TestCustomerDAO()

{

context = new ClassPathXmlApplicationContext("application-context.xml");

customerDAO = (CustomerDAO) context.getBean(BEAN_NAME);

logger.log (Level.INFO, "Obtained customerDAOImpl BEAN...");

}

public void run()

{

System.out.println("Select single customer from HAWQ -> ");

Customer customer = customerDAO.selectCustomer("59047");

System.out.println(customer.toString());

System.out.println("Select five customers from HAWQ -> ");

List<Customer> customers = customerDAO.firstFiveCustomers();

for (Customer cust: customers)

{

System.out.println(cust.toString());

}

}

public static void main(String[] args)

{

// TODO Auto-generated method stub

TestCustomerDAO test = new TestCustomerDAO();

test.run();

}

}

Output

log4j:WARN No appenders could be found for logger (org.springframework.core.env.StandardEnvironment).

log4j:WARN Please initialize the log4j system properly.

Sep 16, 2013 9:59:09 PM pivotal.au.hawq.dao.test.TestCustomerDAO

INFO: Obtained customerDAOImpl BEAN...

Select single customer from HAWQ ->

Customer [customerId=59047, firstName=Olivia, lastName=Anderson, gender=F]

Select five customers from HAWQ ->

Customer [customerId=11371, firstName=Delphine, lastName=Williams, gender=F]

Customer [customerId=5480, firstName=Everett, lastName=Johnson, gender=M]

Customer [customerId=26030, firstName=Dominique, lastName=Davis, gender=M]

Customer [customerId=41922, firstName=Brice, lastName=Martinez, gender=M]

Customer [customerId=47265, firstName=Iva, lastName=Wilson, gender=F]

More Information

Here is the high level page describing the Pivotal HD & HAWQ technology.http://blog.gopivotal.com/products/pivotal-hd-ga

This page dives deeper into the PHD VM with a walkthrough from data loading, map reduce and SQL queries.

http://pivotalhd.cfapps.io/getting-started/pivotalhd-vm.html

Finally, the following link is the direct download location of the VM discussed above above.

http://bitcast-a.v1.o1.sjc1.bitgravity.com/greenplum/pivotal-sw/pivotalhd_singlenodevm_101_v1.7z

Friday 13 September 2013

Greenplum external table accessing GemFire region

To complete the Greenplum external table blogs , the last one I was created is an external table querying GemFire region data as shown below.

Previous Blogs

http://theblasfrompas.blogspot.com.au/2013/09/greenplum-command-based-web-external.html

http://theblasfrompas.blogspot.com.au/2013/09/using-oracles-sqlplus-with-greenplum.html

External Table Querying GemFire region

run.sh

cd /Users/gpadmin/demos/gemfire-externaltable

source env_setup.sh

java -cp $CLASSPATH vmware.au.se.gf7.query.QueryGemFireEmps

exttab.sql

Java Code

Previous Blogs

http://theblasfrompas.blogspot.com.au/2013/09/greenplum-command-based-web-external.html

http://theblasfrompas.blogspot.com.au/2013/09/using-oracles-sqlplus-with-greenplum.html

External Table Querying GemFire region

run.sh

cd /Users/gpadmin/demos/gemfire-externaltable

source env_setup.sh

java -cp $CLASSPATH vmware.au.se.gf7.query.QueryGemFireEmps

exttab.sql

drop external table emps_from_gemfire; CREATE EXTERNAL WEB TABLE emps_from_gemfire (empno int, name character varying(20), job character varying(20), deptno int) EXECUTE '/Users/gpadmin/demos/gemfire-externaltable/run.sh' on host FORMAT 'TEXT' (DELIMITER ',');Output

gpadmin=# select * from emps_from_gemfire; empno | name | job | deptno -------+----------+-----------+-------- 7369 | SMITH | CLERK | 20 7380 | BLACK | CLERK | 40 7377 | ADAM | CLERK | 20 7371 | APICELLA | SALESMAN | 10 7374 | LUCAS | SALESMAN | 10 7381 | BROWN | SALESMAN | 40 7373 | SIENA | CLERK | 40 7376 | ADRIAN | CLERK | 20 7370 | APPLES | MANAGER | 10 7375 | ROB | CLERK | 30 7379 | FRANK | CLERK | 10 7372 | LUCIA | PRESIDENT | 30 7378 | SALLY | MANAGER | 20 (13 rows) Time: 1078.169 ms

Java Code

package vmware.au.se.gf7.query;

import java.util.Collection;

import java.util.Iterator;

import vmware.au.se.gf7.deptemp.beans.Employee;

import com.gemstone.gemfire.cache.client.ClientCache;

import com.gemstone.gemfire.cache.client.ClientCacheFactory;

import com.gemstone.gemfire.cache.query.FunctionDomainException;

import com.gemstone.gemfire.cache.query.NameResolutionException;

import com.gemstone.gemfire.cache.query.Query;

import com.gemstone.gemfire.cache.query.QueryInvocationTargetException;

import com.gemstone.gemfire.cache.query.QueryService;

import com.gemstone.gemfire.cache.query.SelectResults;

import com.gemstone.gemfire.cache.query.TypeMismatchException;

public class QueryGemFireEmps

{

public static final String REGION_NAME = "employees";

public ClientCache cache = null;

public QueryGemFireEmps()

{

cache = new ClientCacheFactory()

.set("name", "GreenPlumGemFireClient")

.set("cache-xml-file", "client.xml")

.set("log-level", "error")

.create();

}

public void run () throws FunctionDomainException, TypeMismatchException, NameResolutionException, QueryInvocationTargetException

{

QueryService queryService = cache.getQueryService();

Query query = queryService.newQuery("SELECT * FROM /" + REGION_NAME);

Object result = query.execute();

Collection<?> collection = ((SelectResults<?>)result).asList();

Iterator<?> iter = collection.iterator();

while (iter.hasNext())

{

Employee emp = (Employee) iter.next();

System.out.println(emp.toCSVFormat());

}

cache.close();

}

public static void main(String[] args) throws Exception

{

QueryGemFireEmps emps = new QueryGemFireEmps();

emps.run();

}

}

Tuesday 10 September 2013

Using Oracle's SQLPlus with Greenplum external tables

I previously blogged about using a java client to access oracle tables from Greenplum external tables. That demo below is handy for any SQL or RDBMS so a generic solution.

http://theblasfrompas.blogspot.com.au/2013/09/greenplum-command-based-web-external.html

Here is a simpler example this time using sqlplus knowing I am only accessing oracle tables here.

run.sh

http://theblasfrompas.blogspot.com.au/2013/09/greenplum-command-based-web-external.html

Here is a simpler example this time using sqlplus knowing I am only accessing oracle tables here.

run.sh

cd /Users/gpadmin/demos/sqlplus-externaltable source setup.sh sqlplus -s scott/tiger@10.32.243.155:1521/ora11gr2 <<! @emps.sql !emps.sql

set feedback off set heading off set pagesize 0 select empno||'|'||ename||'|'||deptno from emp /exttab.sql

drop external table oracle_emps_with_sqlplus; CREATE EXTERNAL WEB TABLE oracle_emps_with_sqlplus (empno int, ename character varying(20), deptno int) EXECUTE '/Users/gpadmin/demos/sqlplus-externaltable/run.sh' on host FORMAT 'TEXT' (DELIMITER '|');Example

gpadmin=# select * from oracle_emps_with_sqlplus where deptno = 30; empno | ename | deptno -------+--------+-------- 7499 | ALLEN | 30 7521 | WARD | 30 7654 | MARTIN | 30 7698 | BLAKE | 30 7844 | TURNER | 30 7900 | JAMES | 30 (6 rows) Time: 88.829 ms

Greenplum : Command-based Web External Tables accessing Oracle

Command based external tables allow you to execute a shell command or script to return data. These make then ideal for consuming data from external systems. In this example we create an external table that connects to Oracle using JDBC to query EMP data.

The example below is based on a Java Class which allows you to connect to any RDBMS and run any SQL query with a list of comma separated columns defined as parameters.

1. Create a script which simply runs a Java Class which return data from a query to any RDBMS.

run.sh

java -cp $CLASSPATH /Users/gpadmin/demos/jdbc-externaltable/ojdbc6.jar:/Users/gpadmin/demos/jdbc-externaltable/jdbc_external.jar:. pivotal.au.greenplum.externaltable.InvokeQuery "oracle.jdbc.OracleDriver" "jdbc:oracle:thin:@10.32.243.125:1521/ora11gr2" "scott" "tiger" "|" "empno,ename,deptno" "select empno,ename,deptno from emp"

Usage as follows

Usage:

java -cp $CLASSPATH pivotal.au.greenplum.externaltable.InvokeQuery {driver-class} {url} {username} {passwd} {delimiter} {column-list} {query}

driver-class: JDBC driver class to load at runtime

url: JDBC connection URL

username: database username

passwd: database password

delimiter: What delimiter to use to separate fields

column-list: List of columns to output to console

query: SQL query to run against external RDBMS

2. Create an external table as shown below.

CREATE EXTERNAL WEB TABLE oracle_emps

(empno int, ename character varying(20), deptno int)

EXECUTE '/Users/gpadmin/demos/jdbc-externaltable/run.sh' on host

FORMAT 'TEXT' (DELIMITER '|');

3. Load into Greenplum ensuring to use the fully qualified path to run.sh on the file system.

4. Access as follows

Finally the java class is defined as follows

The example below is based on a Java Class which allows you to connect to any RDBMS and run any SQL query with a list of comma separated columns defined as parameters.

1. Create a script which simply runs a Java Class which return data from a query to any RDBMS.

run.sh

java -cp $CLASSPATH /Users/gpadmin/demos/jdbc-externaltable/ojdbc6.jar:/Users/gpadmin/demos/jdbc-externaltable/jdbc_external.jar:. pivotal.au.greenplum.externaltable.InvokeQuery "oracle.jdbc.OracleDriver" "jdbc:oracle:thin:@10.32.243.125:1521/ora11gr2" "scott" "tiger" "|" "empno,ename,deptno" "select empno,ename,deptno from emp"

Usage as follows

Usage:

java -cp $CLASSPATH pivotal.au.greenplum.externaltable.InvokeQuery {driver-class} {url} {username} {passwd} {delimiter} {column-list} {query}

driver-class: JDBC driver class to load at runtime

url: JDBC connection URL

username: database username

passwd: database password

delimiter: What delimiter to use to separate fields

column-list: List of columns to output to console

query: SQL query to run against external RDBMS

2. Create an external table as shown below.

CREATE EXTERNAL WEB TABLE oracle_emps

(empno int, ename character varying(20), deptno int)

EXECUTE '/Users/gpadmin/demos/jdbc-externaltable/run.sh' on host

FORMAT 'TEXT' (DELIMITER '|');

3. Load into Greenplum ensuring to use the fully qualified path to run.sh on the file system.

gpadmin=# \i exttab.sql; DROP EXTERNAL TABLE Time: 13.052 ms CREATE EXTERNAL TABLE Time: 5.190 ms

4. Access as follows

gpadmin=# select * from oracle_emps; empno | ename | deptno -------+--------+-------- 7369 | SMITH | 20 7499 | ALLEN | 30 7521 | WARD | 30 7566 | JONES | 20 7654 | MARTIN | 30 7698 | BLAKE | 30 7782 | CLARK | 10 7788 | SCOTT | 20 7839 | KING | 10 7844 | TURNER | 30 7876 | ADAMS | 20 7900 | JAMES | 30 7902 | FORD | 20 7934 | MILLER | 10 (14 rows) Time: 557.705 ms gpadmin=# select * from oracle_emps where deptno = 20; empno | ename | deptno -------+-------+-------- 7369 | SMITH | 20 7566 | JONES | 20 7788 | SCOTT | 20 7876 | ADAMS | 20 7902 | FORD | 20 (5 rows) Time: 543.120 ms

Finally the java class is defined as follows

package pivotal.au.greenplum.externaltable;

import java.sql.Connection;

import java.sql.DriverManager;

import java.sql.ResultSet;

import java.sql.SQLException;

import java.sql.Statement;

public class InvokeQuery

{

private String url;

private String username;

private String passwd;

private String columnList;

private String delimeter;

private String driverClassName;

private String query;

private Connection conn = null;

public InvokeQuery()

{

}

private void run (String[] args) throws SQLException

{

driverClassName = args[0];

url = args[1];

username = args[2];

passwd = args[3];

delimeter = args[4];

columnList = args[5];

query = args[6];

Statement stmt = null;

ResultSet rset = null;

String[] columns = splitColumns(columnList);

try

{

conn = getConnection(driverClassName);

stmt = conn.createStatement();

rset = stmt.executeQuery(query);

int size = columns.length;

while (rset.next())

{

int i = 0;

// go through columns and output data

for (String column: columns)

{

i++;

if (i < size)

{

System.out.print(rset.getString(column) + "" + delimeter);

}

else

{

System.out.print(rset.getString(column) + "\n");

}

}

}

}

catch (Exception e)

{

e.printStackTrace();

}

finally

{

if (rset != null)

{

rset.close();

}

if (stmt != null)

{

stmt.close();

}

if (conn != null)

{

conn.close();

}

}

}

private String[] splitColumns (String columArray)

{

return columArray.split(",");

}

private Connection getConnection(String driverClass) throws SQLException, ClassNotFoundException

{

Class.forName(driverClassName);

conn = DriverManager.getConnection(url, username, passwd);

return conn;

}

public static void main(String[] args) throws Exception

{

InvokeQuery invokeQuery = new InvokeQuery();

if (args.length != 7)

{

System.out.println("Less then 7 arguments provided, usage as follows");

System.out.println("\nUsage: \n\n\tjava pivotal.au.greenplum.externaltable.InvokeQuery <driver-class> <url> <username> <passwd> <delimeter> <colum-list> <query> \n");

System.exit(-1);

}

invokeQuery.run(args);

}

}

Thursday 5 September 2013

Pivotal SQLFire - JDBC Client Failover URL using secondary-locators

When you use the thin client to connect to a SQLFire locator member (rather than

directly to a SQLFire server), the thin client driver can provide automatic failover

if the initial connection to the distributed system is lost. Note, however, that this assumes the

initial connection to the specified SQLFire locator succeeds. To improve the chances

of establishing an initial connection to a SQLFire system, you can optionally

specify the address of a secondary locator in addition to the primary locator, using

the secondary-locators connection property. For

example:

jdbc:sqlfire://locator1:port1/;secondary-locators=locator2:port2

jdbc:sqlfire://locator1:port1/;secondary-locators=locator2:port2

Pivotal SQLFire : Using -sync=false for Server nodes

Specifying -sync=false (the default for locators) causes the sqlf command to return control after the member reaches "waiting" state. With -sync=true (the default for servers), the sqlf command does not return control until after all dependent members have booted and the member has finished synchronizing disk stores.

Always use

-sync=false when starting multiple members

on the same machine, especially when executing